The DistilBERT Model: A Promising Approach to Improve Machine Reading Comprehension Models

Abstract

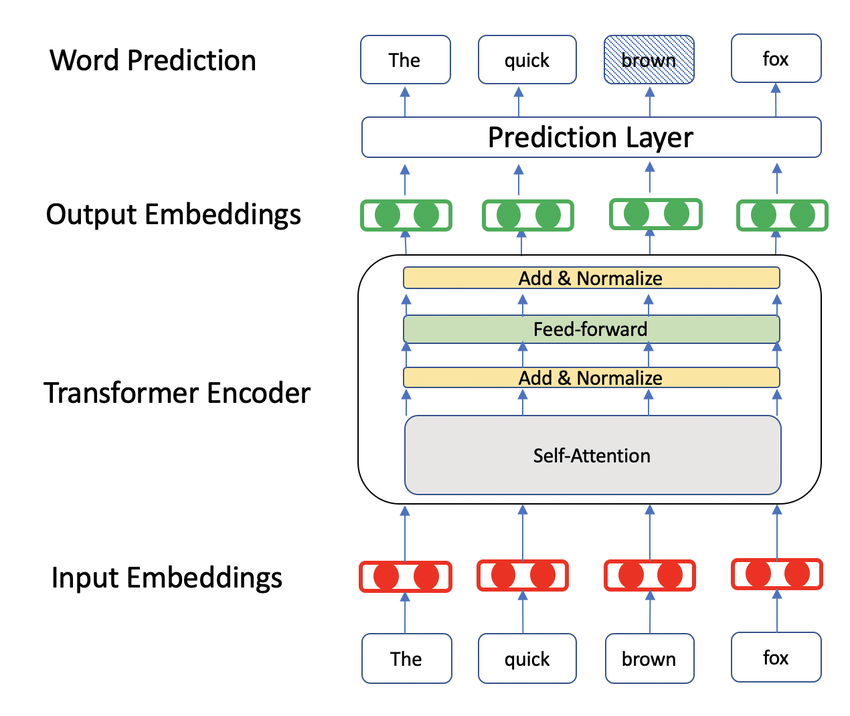

Machine Reading Comprehension (MRC) is a challenging task in Natural Language Processing (NLP) in which a machine must read a given text passage and answer a set of questions about it. This paper gives an overview of recent advances in MRC and highlights key challenges and future directions for the research area. It also evaluates several baseline models on the dataset, examines the challenges that the dataset poses for existing MRC models, and introduces the DistilBERT model to improve the accuracy of answer extraction. The supervised paradigm for training MRC models represents a practical path toward comprehensive natural language understanding systems. To improve on the base DistilBERT model, we experimented with a variety of question heads that differ in number of layers, activation function, and overall structure. With the presented technique, DistilBERT proves effective for question-answering tasks and delivers state-of-the-art performance while requiring fewer computational resources than larger models such as BERT. By investigating other question head architectures, we could enhance the model's performance and better understand how it works. These findings could serve as a foundation for future work on question-answering systems and other natural language processing tasks.
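The abstract does not specify the head designs that were tried, so the following is only a minimal sketch of the general idea, assuming that a "question head" is the small task-specific network that maps each token's encoder output to a start score and an end score for extractive answering. Everything here is hypothetical and for illustration: the names `make_head`, `head_forward`, and `best_span`, the random weights, the omission of bias terms, and the toy 8-dimensional token vectors that stand in for real DistilBERT outputs (which are 768-dimensional in the base model).

```python
import math
import random

# Candidate activation functions for the hidden layers of the head.
ACTIVATIONS = {
    "relu": lambda x: max(0.0, x),
    "tanh": math.tanh,
    "gelu": lambda x: 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0))),
}

def make_head(hidden_size, layer_sizes, seed=0):
    """Build random weight matrices for a bias-free MLP head.

    layer_sizes lists the hidden layer widths; the final layer always has
    two outputs, one start logit and one end logit per token.
    """
    rng = random.Random(seed)
    sizes = [hidden_size] + list(layer_sizes) + [2]
    return [
        [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(sizes, sizes[1:])
    ]

def head_forward(head, token_vectors, activation="gelu"):
    """Apply the head to every token vector and return (start_logits, end_logits)."""
    act = ACTIVATIONS[activation]
    start_logits, end_logits = [], []
    for vec in token_vectors:
        for depth, layer in enumerate(head):
            vec = [sum(w * x for w, x in zip(row, vec)) for row in layer]
            if depth < len(head) - 1:  # no activation on the output layer
                vec = [act(x) for x in vec]
        start_logits.append(vec[0])
        end_logits.append(vec[1])
    return start_logits, end_logits

def best_span(start_logits, end_logits, max_answer_len=30):
    """Return the (start, end) token indices with the highest summed score."""
    best, best_score = (0, 0), float("-inf")
    for i, start_score in enumerate(start_logits):
        for j in range(i, min(i + max_answer_len, len(end_logits))):
            score = start_score + end_logits[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best

# Compare three head depths on the same stand-in encoder output (5 tokens).
rng = random.Random(1)
tokens = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(5)]
for layers in ([], [16], [16, 16]):
    head = make_head(8, layers)
    start, end = head_forward(head, tokens, activation="relu")
    print(layers, best_span(start, end))
```

In a real experiment the head would be trained jointly with the encoder and compared on exact-match and F1 scores. The sketch only shows how depth and activation can be varied while the span-selection step stays the same.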
