WeedFocusNet: A Revolutionary Approach using the Attention-Driven ResNet152V2 Transfer Learning

Abstract

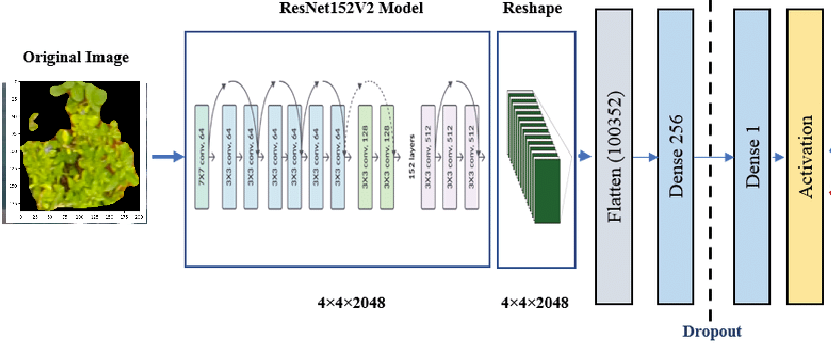

The advancement of modern agriculture depends heavily on accurate weed detection, which supports efficient resource use and higher crop yields. Traditional methods, however, often lack accuracy and efficiency. This paper presents WeedFocusNet, an approach that leverages attention-driven ResNet152V2 transfer learning to address these challenges. The approach improves model generalization and focuses on the features most relevant to weed identification, overcoming limitations of existing methods. The objective is to develop a model that improves weed detection accuracy while optimizing computational efficiency. WeedFocusNet builds on a pretrained ResNet152V2 backbone and integrates an attention module that concentrates its predictions on the most significant image features. Evaluated on a dataset of weed and crop images, WeedFocusNet achieved an accuracy of 99.28%, significantly outperforming previous methods and models such as MobileNetV2, ResNet50, and custom CNN models in accuracy and time complexity, although it has a larger memory footprint. These results emphasize the transformative potential of WeedFocusNet as a powerful approach for automating weed detection in agricultural fields.
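The abstract does not describe the internals of the attention module. As a hedged illustration only, the sketch below assumes a squeeze-and-excitation (SE) style channel attention layer placed on the backbone's final feature map, followed by global average pooling and a softmax classifier. In a Keras pipeline the feature map would typically come from `tf.keras.applications.ResNet152V2(include_top=False, weights="imagenet")` with its layers frozen; here a random 7×7×2048 array stands in for it so the example runs with NumPy alone. The function names `se_attention`, `attention_head`, and `init_params`, the reduction ratio of 16, and the two-class output are hypothetical choices, not details taken from WeedFocusNet.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def se_attention(fmap, w1, b1, w2, b2):
    """Hypothetical SE-style channel attention on an (H, W, C) feature map."""
    squeeze = fmap.mean(axis=(0, 1))             # (C,) global average pool per channel
    hidden = np.maximum(0.0, squeeze @ w1 + b1)  # (C // r,) ReLU bottleneck
    scale = sigmoid(hidden @ w2 + b2)            # (C,) per-channel weights in (0, 1)
    return fmap * scale                          # broadcast the weights over H and W


def attention_head(fmap, params):
    """Hypothetical head: channel attention -> global average pooling -> softmax."""
    attended = se_attention(fmap, params["w1"], params["b1"], params["w2"], params["b2"])
    pooled = attended.mean(axis=(0, 1))          # (C,)
    return softmax(pooled @ params["w_out"] + params["b_out"])


def init_params(channels=2048, reduction=16, num_classes=2, seed=0):
    """Randomly initialised weights; a real model would learn these during fine-tuning."""
    rng = np.random.default_rng(seed)
    hidden = channels // reduction
    return {
        "w1": rng.normal(0.0, 0.01, (channels, hidden)),
        "b1": np.zeros(hidden),
        "w2": rng.normal(0.0, 0.01, (hidden, channels)),
        "b2": np.zeros(channels),
        "w_out": rng.normal(0.0, 0.01, (channels, num_classes)),
        "b_out": np.zeros(num_classes),
    }


if __name__ == "__main__":
    # Stand-in for a frozen ResNet152V2 feature map (7x7x2048 for a 224x224 input).
    fmap = np.random.default_rng(1).random((7, 7, 2048))
    probs = attention_head(fmap, init_params())
    print(probs.shape, probs.sum())
```

Because the sigmoid gates lie strictly between 0 and 1, each channel is rescaled rather than switched off, which is one common way an attention module can emphasize informative channels while leaving the pretrained backbone features intact.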
