Bidirectional Encoder Representations from Transformers for Improving the CNN-LSTM COVID-19 Disease Detection Classifier


Abstract

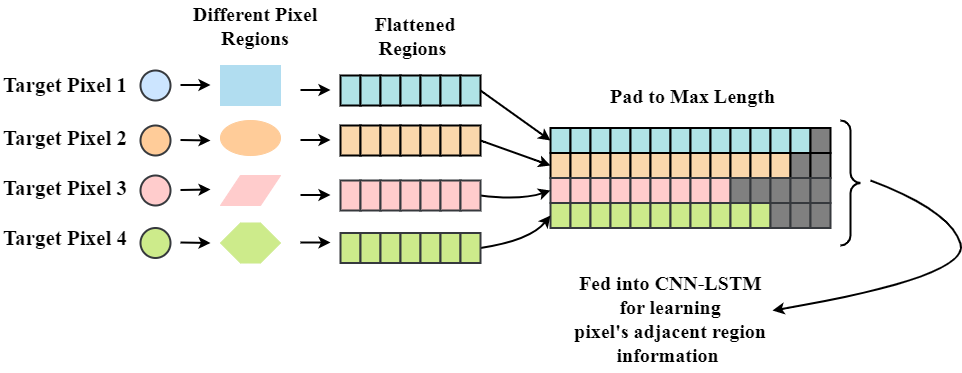

Early identification of COVID-19-infected persons is crucial to prevent the transmission of the SARS-CoV-2 virus. To this end, lung Computed Tomography (CT) scan segmentation and classification models have been widely developed for COVID-19 diagnosis. Among them, the Multi-Scale function learning with an Attention-based UNet and Marginal Space Deep Ambiguity-attentive Transfer Learning (MS-AUNet-MSDATL) framework was developed to simultaneously segment COVID-19-infected regions and classify their risk levels from CT and Chest X-Ray (CXR) scans. This model uses a Convolutional Neural Network–Long Short-Term Memory (CNN-LSTM) network as its classifier. Although CNN-LSTM learns spatial and temporal information efficiently, it largely ignores individual pixels and their neighboring information, which lowers the classification rate. To address this issue, this paper introduces Bidirectional Encoder Representations from Transformers (BERT) alongside the CNN-LSTM in the MS-AUNet-MSDATL model for efficient COVID-19 risk-level classification. First, the segmented CT and CXR images from MS-AUNet are given as input to the BERT model. BERT consists of a stack of transformer encoder layers that extracts fixed features from a pre-trained model to obtain a numerical representation of the given image. These numerical representations are then passed to a pre-trained CNN model, which selects the important features. The LSTM model receives the CNN output and generates a new representation based on the order of the data. In addition, a Fully Connected Layer (FCL) maps the CNN-LSTM outputs to the classification classes, so that pixels and their neighboring information are learned for COVID-19 risk-level detection and diagnosis. The complete framework is termed MS-AUNet-EMSDATL.
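The BERT, CNN, LSTM and FCL chain described above can be sketched as a single forward pass in NumPy. This is a minimal, shape-level sketch under stated assumptions, not the authors' implementation: the function names (`patchify`, `encoder_layer`, `classify`, etc.), the patch size, embedding width, number of encoder layers, convolution width, LSTM size and the three output risk classes are all hypothetical. Turning the image into tokens by splitting it into patches is a Vision-Transformer-style choice the abstract does not specify, max-pooling stands in for the CNN's "feature selection", layer normalisation omits learned gain and bias, and all weights are random rather than pre-trained, so the output probabilities carry no diagnostic meaning.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def layer_norm(x, eps=1e-5):
    # Normalise each token vector to zero mean and unit variance (no learned gain/bias).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def patchify(img, p):
    # Hypothetical tokeniser: split an (H, W) image into non-overlapping
    # p x p patches and flatten each one -> (N, p*p) token matrix.
    h, w = img.shape
    return (img.reshape(h // p, p, w // p, p)
               .transpose(0, 2, 1, 3)
               .reshape(-1, p * p))


def encoder_layer(x, params):
    # One transformer encoder layer: unmasked (bidirectional) single-head
    # self-attention, then a position-wise feed-forward network.
    wq, wk, wv, w1, w2 = params
    q, k, v = x @ wq, x @ wk, x @ wv
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1]))  # no mask: all tokens see all tokens
    x = layer_norm(x + attn @ v)
    return layer_norm(x + np.maximum(0.0, x @ w1) @ w2)


def conv1d_relu(x, kernel):
    # 'Same'-padded 1-D convolution over the token axis; kernel: (k, d_in, d_out).
    k = kernel.shape[0]
    padded = np.pad(x, ((k // 2, k // 2), (0, 0)))
    out = np.stack([np.einsum("kd,kdo->o", padded[t:t + k], kernel)
                    for t in range(x.shape[0])])
    return np.maximum(0.0, out)


def lstm_last_hidden(x, w, u, b, hidden):
    # Standard LSTM over the sequence; gates stacked as [input, forget, output, cell].
    h, c = np.zeros(hidden), np.zeros(hidden)
    for x_t in x:
        i, f, o, g = np.split(x_t @ w + h @ u + b, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h


def classify(img, patch=8, d=32, conv_out=24, hidden=16, n_classes=3,
             n_layers=2, seed=0):
    rng = np.random.default_rng(seed)  # random, untrained weights (shapes only)
    w = lambda *shape: rng.normal(0.0, 0.1, shape)
    tokens = patchify(img, patch)                  # (N, patch*patch)
    n = tokens.shape[0]
    x = tokens @ w(patch * patch, d) + w(n, d)     # patch + positional embedding
    for _ in range(n_layers):                      # stacked encoder layers
        x = encoder_layer(x, [w(d, d), w(d, d), w(d, d), w(d, 4 * d), w(4 * d, d)])
    x = conv1d_relu(x, w(3, d, conv_out))          # CNN over the token sequence
    x = x.reshape(n // 2, 2, conv_out).max(axis=1) # max-pool with stride 2 (N must be even)
    h = lstm_last_hidden(x, w(conv_out, 4 * hidden), w(hidden, 4 * hidden),
                         np.zeros(4 * hidden), hidden)
    return softmax(h @ w(hidden, n_classes))       # fully connected layer + softmax


if __name__ == "__main__":
    img = np.random.default_rng(1).random((32, 32))  # stand-in for a segmented CT slice
    print(classify(img))                              # 3 class probabilities
```

Because the encoder applies no attention mask, each patch token attends to every other patch in both directions, which is what "bidirectional" refers to in BERT; the CNN and LSTM then operate on this contextualised token sequence rather than on raw pixels.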
Finally, the test results show that MS-AUNet-MS-B-DATL achieves accuracies of 98.67% and 98.55% on CT and CXR images, respectively, compared with other existing frameworks.