Hast Mudra: Hand Sign Gesture Recognition Using LSTM

Abstract

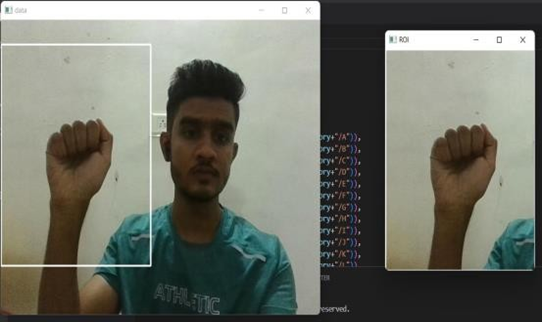

Although sign language is their most natural means of communication, deaf and mute (D&M) people find it challenging to socialize. Because the structure of sign language is distinct from that of text, a language barrier arises between hearing people and D&M individuals, who therefore rely on vision-based communication. A standard interface that converts sign language into visible text would let others understand the gestures easily. Research and development has consequently been directed at a vision-based interface system that allows D&M persons to communicate with others without either side having to learn the other's language. In this project, we first collected data and created a dataset, then extracted useful features from the images. After verification, we trained the model using the long short-term memory (LSTM) algorithm with TensorFlow and Keras, and classified the gestures by alphabet letter. On our own dataset, the system achieved an accuracy of around 86.75% in an experimental test.
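To illustrate the classification step described in the abstract, the following is a minimal sketch of what an LSTM computes when it reads a gesture and scores it against the 26 alphabet letters. It is not the authors' implementation: the abstract states that the model was built with TensorFlow and Keras, whereas this sketch uses only the Python standard library so that the gate arithmetic is visible. The class name `TinyLSTMClassifier`, the helper `predict_letter`, the feature and hidden sizes, and the assumption that each gesture arrives as a sequence of per-frame feature vectors are all hypothetical, and the weights are random and untrained.

```python
import math
import random

ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class TinyLSTMClassifier:
    """Hypothetical single-layer LSTM followed by a softmax output layer."""

    def __init__(self, n_features, n_hidden, n_classes, seed=0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        # One weight matrix and bias per gate, applied to [x_t, h_{t-1}]:
        # i = input gate, f = forget gate, o = output gate, g = candidate.
        self.W = {k: rand_matrix(n_hidden, n_features + n_hidden, rng)
                  for k in "ifog"}
        self.b = {k: [0.0] * n_hidden for k in "ifog"}
        self.W_out = rand_matrix(n_classes, n_hidden, rng)

    def _gate(self, name, z, activation):
        pre = matvec(self.W[name], z)
        return [activation(p + b) for p, b in zip(pre, self.b[name])]

    def step(self, x, h, c):
        """Advance the hidden state h and cell state c by one frame."""
        z = list(x) + h
        i = self._gate("i", z, sigmoid)
        f = self._gate("f", z, sigmoid)
        o = self._gate("o", z, sigmoid)
        g = self._gate("g", z, math.tanh)
        c_new = [ft * ct + it * gt for ft, ct, it, gt in zip(f, c, i, g)]
        h_new = [ot * math.tanh(cn) for ot, cn in zip(o, c_new)]
        return h_new, c_new

    def predict_proba(self, sequence):
        """Return one probability per class for a sequence of frames."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:
            h, c = self.step(x, h, c)
        logits = matvec(self.W_out, h)
        top = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(v - top) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]


def predict_letter(model, sequence):
    """Map the most probable class index to an alphabet letter."""
    probs = model.predict_proba(sequence)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ALPHABET[best]


if __name__ == "__main__":
    rng = random.Random(1)
    # Assumed input: 5 frames, each an 8-dimensional feature vector.
    frames = [[rng.random() for _ in range(8)] for _ in range(5)]
    model = TinyLSTMClassifier(n_features=8, n_hidden=4, n_classes=26)
    print(predict_letter(model, frames))
```

Because the weights are untrained, the predicted letter is arbitrary. In a trained system, the weights would be fitted to the labelled gesture dataset, and the reported accuracy would be measured on held-out samples.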
