Deep Reinforcement Learning Framework with Q Learning For Optimal Scheduling in Cloud Computing

Abstract

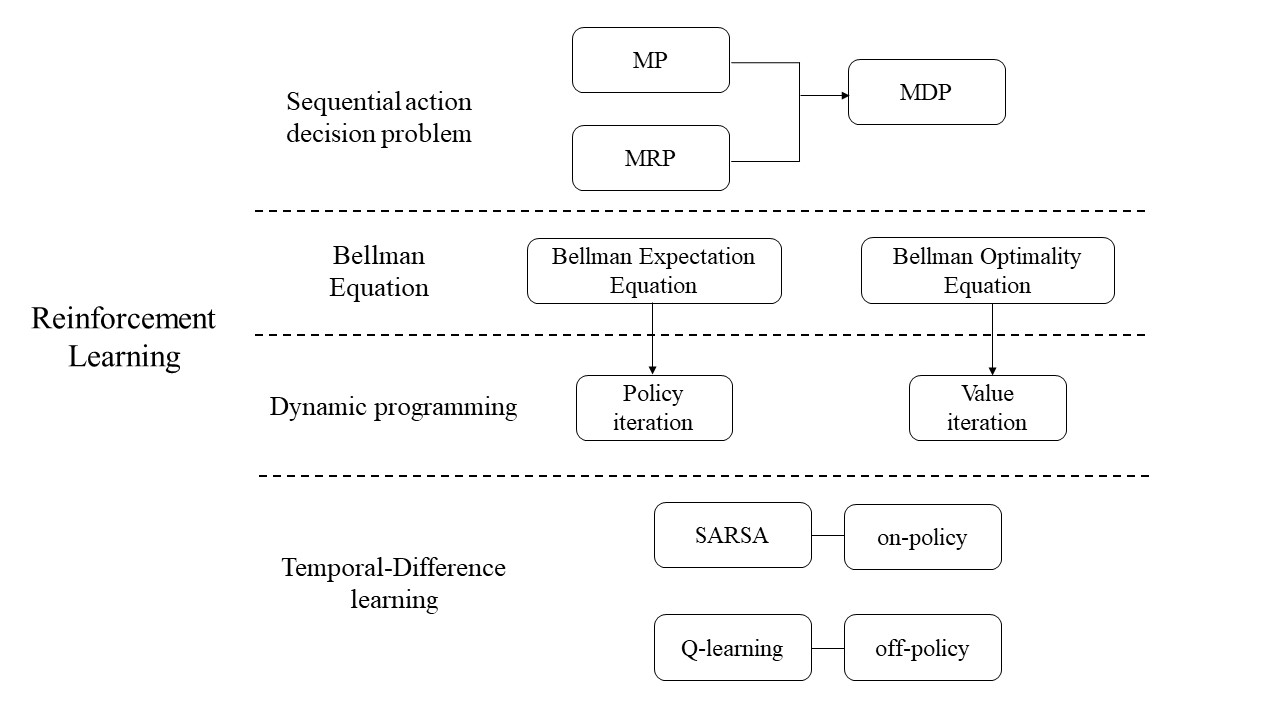

Cloud computing is an emerging technology that is increasingly valued for its diverse uses, including data processing, the Internet of Things (IoT), and data storage. The continued growth in the number of cloud users, together with the widespread integration of IoT devices with cloud platforms, has significantly increased the volume of data being generated. As a result, managing data stored in the cloud has become more challenging. Migrating all data to cloud-hosted data centers raises several significant challenges, including high bandwidth consumption, longer wait times, higher costs, and greater energy consumption. In response, cloud computing allocates resources according to the specific actions of users, with the aim of providing clients with a superior Quality of Service (QoS) and an optimal response time while adhering to the established Service Level Agreement. It is therefore essential to use the available computational resources effectively, which requires an optimal approach to task scheduling. The goal of this study is to allocate and schedule cloud-based virtual machines (VMs) and tasks so as to reduce completion times and associated costs. The study presents a new scheduling method that uses Q-Learning to optimize resource utilization. The algorithm's primary goals include optimizing its objective function, building the ideal network, and utilizing experience replay techniques.
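To make the idea of Q-Learning with experience replay for task-to-VM scheduling more concrete, the following is a minimal sketch and not the method of the study itself. The abstract does not specify the state, action, or reward design, so everything here is an assumption: the toy VM speeds and prices, the backlog-based state, the reward that combines completion time and cost, and the hyperparameters. A lookup table stands in for the neural network so that the example needs only the Python standard library.

```python
import random
from collections import defaultdict, deque

# Toy environment; every number here is an illustrative assumption.
VM_SPEED = [1, 2]        # work units each VM finishes per time step
VM_PRICE = [1.0, 3.0]    # cost per time step of execution
TASK_SIZES = [2, 4]      # possible task lengths in work units
MAX_BACKLOG = 6          # cap on queued time steps per VM (keeps states finite)
COST_WEIGHT = 0.5        # weight of cost relative to completion time
ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.1


def step(state, vm, rng):
    """Assign the current task to `vm`; return (reward, next_state)."""
    task, backlog = state
    run_time = -(-task // VM_SPEED[vm])            # ceiling division
    completion = backlog[vm] + run_time            # waiting + execution time
    reward = -(completion + COST_WEIGHT * run_time * VM_PRICE[vm])
    new_backlog = list(backlog)
    new_backlog[vm] = min(MAX_BACKLOG, backlog[vm] + run_time)
    # One time step passes before the next task arrives.
    new_backlog = tuple(max(0, b - 1) for b in new_backlog)
    return reward, (rng.choice(TASK_SIZES), new_backlog)


def greedy(q, state):
    """Pick the VM with the highest estimated Q-value in `state`."""
    return max(range(len(VM_SPEED)), key=lambda vm: q[(state, vm)])


def train(steps=20000, buffer_size=1000, batch_size=16, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)                 # Q-table: (state, vm) -> value
    replay = deque(maxlen=buffer_size)     # experience replay buffer
    state = (rng.choice(TASK_SIZES), (0,) * len(VM_SPEED))
    for _ in range(steps):
        # Epsilon-greedy exploration.
        if rng.random() < EPSILON:
            vm = rng.randrange(len(VM_SPEED))
        else:
            vm = greedy(q, state)
        reward, next_state = step(state, vm, rng)
        replay.append((state, vm, reward, next_state))
        # Replay a random minibatch of stored transitions.
        batch = rng.sample(list(replay), min(batch_size, len(replay)))
        for s, a, r, s2 in batch:
            target = r + GAMMA * q[(s2, greedy(q, s2))]
            q[(s, a)] += ALPHA * (target - q[(s, a)])
        state = next_state
    return q
```

After training, `greedy(train(), (4, (0, 0)))` returns the index of the VM that the learned table prefers for a task of size 4 when both VMs are idle. Sampling past transitions at random, rather than learning only from the latest one, is what the experience replay buffer contributes; a deep variant would replace the table `q` with a network trained on the same minibatches.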
