Blood Pressure Estimation from Speech Recordings: Exploring the Role of Voice-over Artists

Abstract

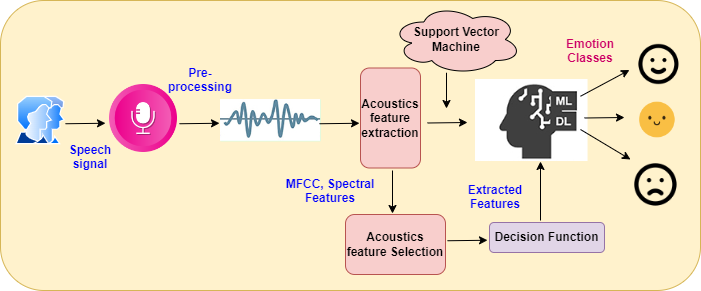

Hypertension is a prevalent global health concern associated with cardiovascular disease and significant morbidity and mortality. Accurate and prompt blood pressure monitoring is crucial for early detection and successful management, but traditional cuff-based methods can be inconvenient, which has motivated the exploration of non-invasive, continuous estimation methods. Speech recordings are a promising candidate because of the potential link between vocal characteristics and physiological responses. This research aims to bridge the gap between speech processing and health monitoring by investigating the relationship between speech recordings and blood pressure estimation. We focus on the role of voice-over artists, who are known for their ability to control their speech and convey emotions through voice, and we draw on that expertise to seek insights into the potential correlation between speech characteristics, emotional expression, and blood pressure levels. The study presents an innovative and convenient approach to health assessment, enriches our understanding of the relationship between speech recordings and physiological responses, and lays a foundation for future advances in healthcare and human-robot interaction, opening new avenues for integrating voice-related factors into healthcare technologies.
